Deploying DeepSeek-R1 on Azure - AI Foundry & Container Apps

DeepSeek-R1 is a high-performance AI model that requires significant computing power. In this guide, we'll explore two powerful ways to deploy it on Azure: Azure AI Foundry and Azure Container Apps with Serverless GPUs. These methods offer scalability, cost-efficiency, and ease of deployment, so you can focus on building amazing AI-powered applications.

Deploy DeepSeek-R1 on Azure AI Foundry

Why Choose Azure AI Foundry?

- Instant Model Access: No need to manage containers—just deploy with a few clicks.

- Scalable and Secure: Enterprise-grade security and auto-scaling.

- API Integration: Easily connect DeepSeek-R1 to your applications.

Step-by-Step Deployment

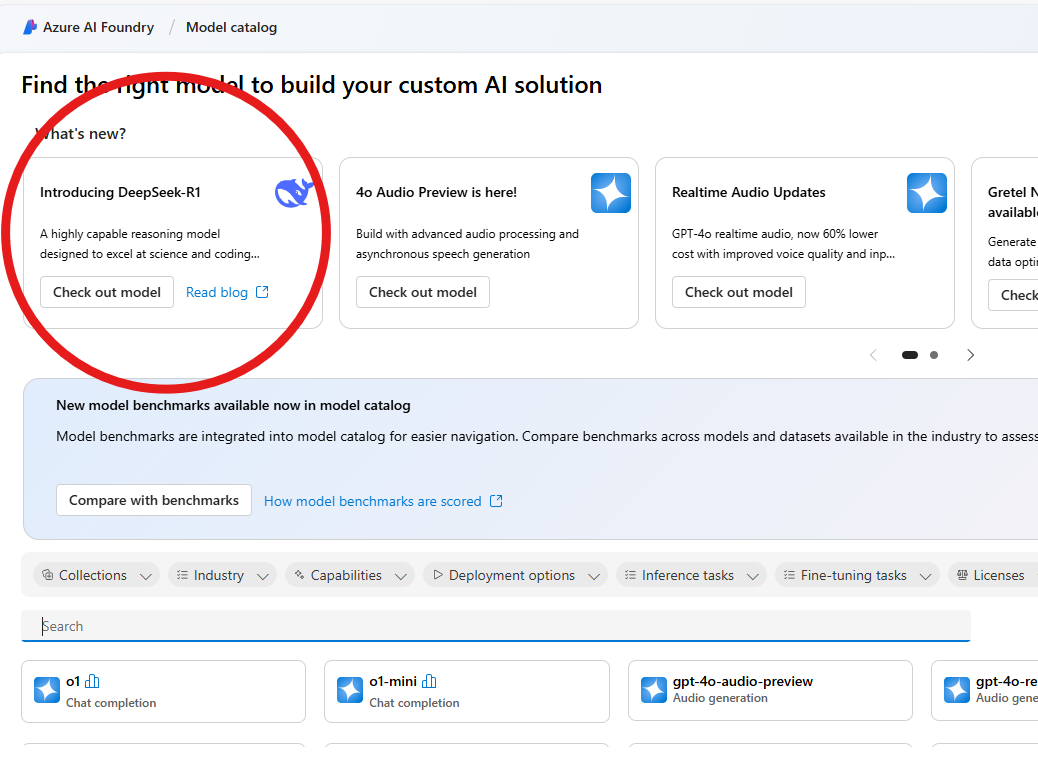

1. Access Azure AI Foundry

- Visit the Azure AI Foundry portal (ai.azure.com).

- Click the "Check out model" button, or search for DeepSeek-R1 in the model catalog and open its model card.

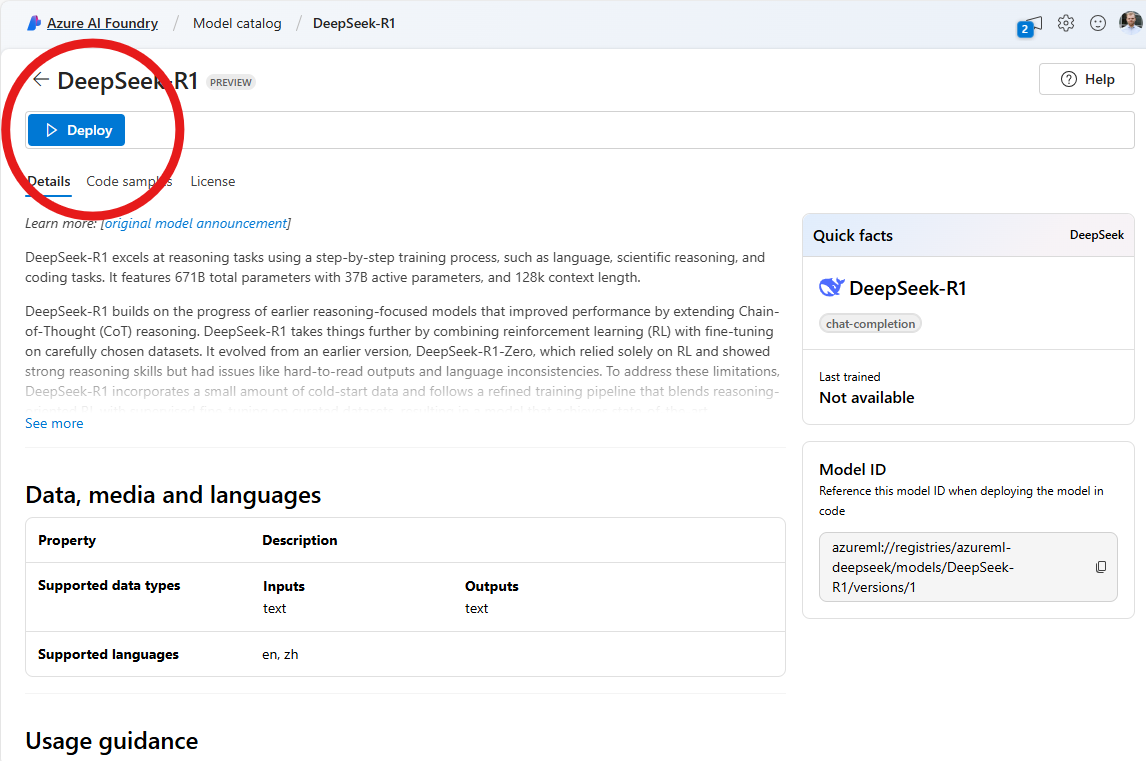

2. Deploy the Model

- Click the "Deploy" button.

- Select your Azure subscription and deployment region.

- Wait for the deployment to complete—Azure will provide an API endpoint and key.

3. Integrate DeepSeek-R1 into Your Application

- Use the API endpoint and key to send inference requests.

- Test responses in the built-in playground before integrating into production applications.

```csharp
using System;
using Azure;
using Azure.AI.Inference;

// Endpoint URL and API key come from your deployment's details page in Azure AI Foundry.
var endpoint = new Uri("<ENDPOINT_URL>");
// AzureKeyCredential expects the deployment's API key; the environment variable name is your choice.
var credential = new AzureKeyCredential(Environment.GetEnvironmentVariable("AZURE_INFERENCE_KEY"));
var model = "DeepSeek-R1";

var client = new ChatCompletionsClient(endpoint, credential);

var requestOptions = new ChatCompletionsOptions()
{
    Messages =
    {
        new ChatRequestUserMessage("I am going to Paris, what should I see?")
    },
    // C# property name is MaxTokens (not max_tokens).
    MaxTokens = 2048,
    Model = model
};

Response<ChatCompletions> response = client.Complete(requestOptions);
Console.WriteLine(response.Value.Choices[0].Message.Content);
```
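DeepSeek-R1 is a reasoning model, and its reply usually begins with the model's chain of thought wrapped in `<think>...</think>` tags, followed by the final answer. If you only want to show the answer to users, you can split the two. The following is a minimal sketch that assumes that tag format; `ReasoningParser` and `Split` are hypothetical names, not part of the Azure.AI.Inference SDK.

```csharp
using System.Text.RegularExpressions;

public static class ReasoningParser
{
    // Assumed format: optional leading <think>...</think> block, then the answer.
    // Singleline lets '.' match newlines inside the reasoning block.
    private static readonly Regex ThinkBlock = new Regex(
        @"^\s*<think>(?<reasoning>.*?)</think>(?<answer>.*)$",
        RegexOptions.Singleline);

    // Returns the reasoning (empty if there is no <think> block) and the answer, both trimmed.
    public static (string Reasoning, string Answer) Split(string content)
    {
        var text = content ?? string.Empty;
        var match = ThinkBlock.Match(text);
        if (!match.Success)
        {
            return (string.Empty, text.Trim());
        }
        return (match.Groups["reasoning"].Value.Trim(), match.Groups["answer"].Value.Trim());
    }
}
```

For example, `var (reasoning, answer) = ReasoningParser.Split(response.Value.Choices[0].Message.Content);` gives you the answer to display and the reasoning to log or hide. If a reply has no `<think>` block, the whole text is returned as the answer.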

Step-by-Step animation from Microsoft

Alternative: Deploy DeepSeek-R1 on Azure Container Apps

Why Deploy DeepSeek-R1 on Azure Container Apps?

DeepSeek-R1 is a high-performance open-weight AI model, great for chatbots, summarization, and creative writing. But large models demand serious computing power. That’s where Azure Container Apps with serverless GPUs come in.

Instead of provisioning expensive GPU instances or wrangling Kubernetes, you just deploy your model in a container and let Azure handle the rest.

The benefits?

- Serverless GPUs: Automatically scales up when needed and scales down when idle.

- Cost-efficient: No idle GPU time means no wasted money.

- Simple Deployment: No DevOps nightmares, just a few clicks (or commands).

Now, let’s deploy!

Before we launch 🚀

To speed up inference, you can run the container on Azure Container Apps serverless GPUs, which are currently in preview. GPUs require a quota request and are only available in supported regions: West US 3, Australia East, and Sweden Central. Request access before you start. GPUs are not required, but they are highly recommended.

Step-by-Step: Deploying DeepSeek-R1 on Azure Container Apps

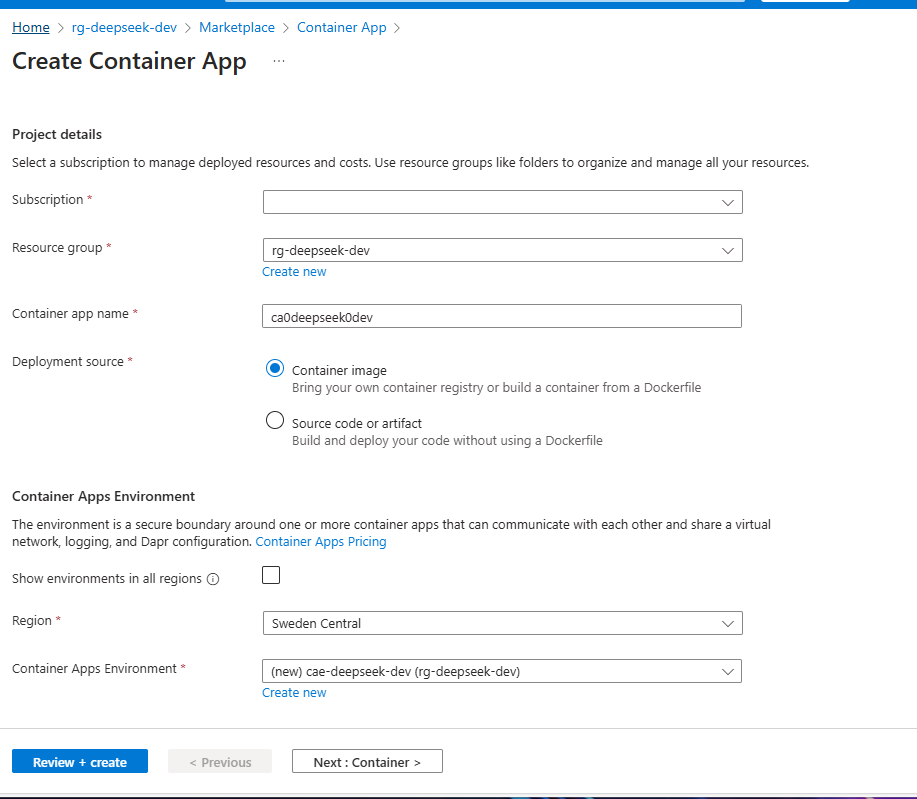

1. Create an Azure Container App

- Head over to portal.azure.com

- Click “Create a resource” and search for Azure Container Apps

- Select Container App and hit “Create”

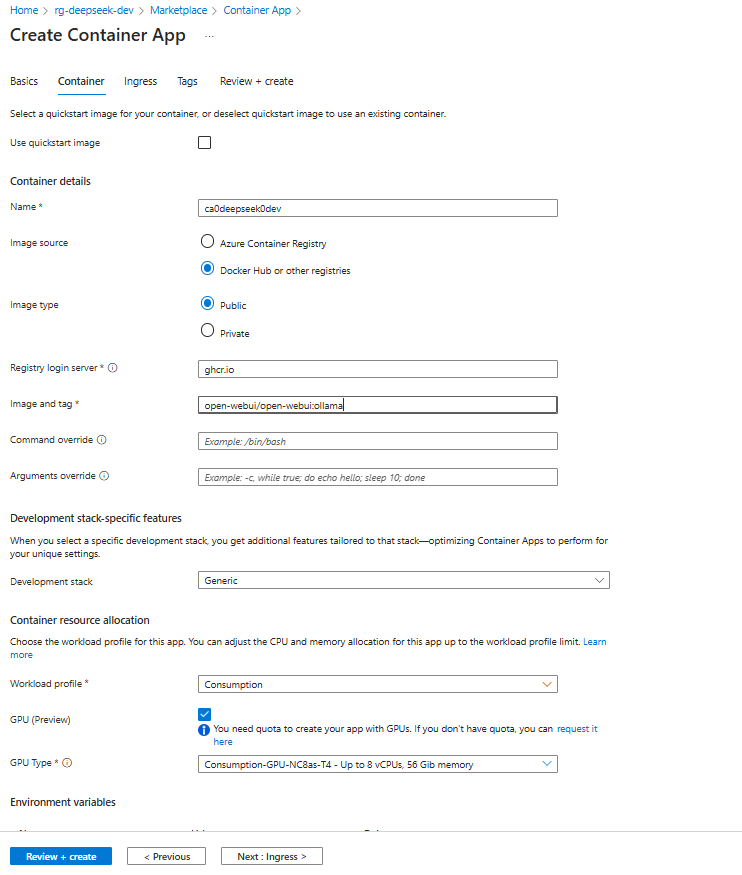

2. Configure Your App

- Choose Your Subscription and Resource Group

- Pick a Region (if you want to use a GPU, it must be West US 3, Australia East, or Sweden Central)

- Enter Container Details:

  - Image Source: Docker Hub or other registries

  - Registry Login Server: ghcr.io

  - Image and Tag: open-webui/open-webui:ollama

💡 Want faster startups? Host the image in Azure Container Registry (ACR).


3. Enable GPU Power (Optional)

- Choose "Consumption" as the workload profile

- Enable GPU (Preview)

- Select "T4" GPU

💡 If the GPU option isn’t available, check that you’ve requested access.

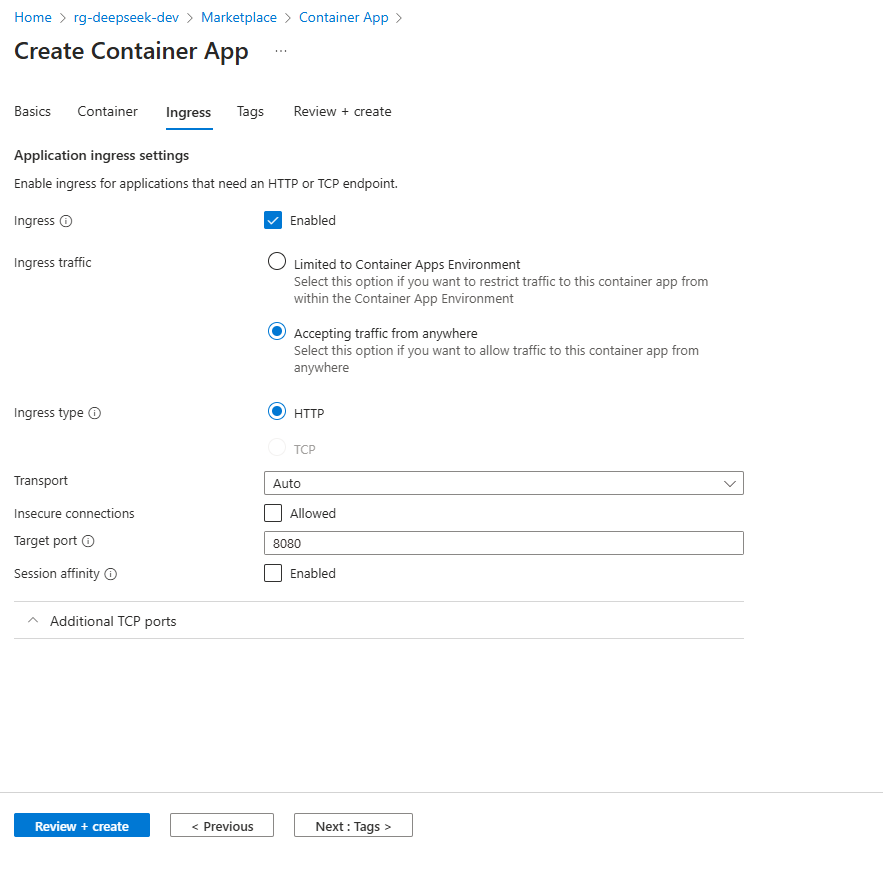

4. Configure Network Settings

- Enable Ingress

- Set Ingress traffic to "Accepting traffic from anywhere"

- Set Target Port to 8080

Now, your app will be accessible from anywhere!


5. Deploy and Let Azure Do the Work

Hit "Create" and let Azure handle the deployment. No manual server setup, no Kubernetes headaches—just AI on demand.

Running DeepSeek-R1

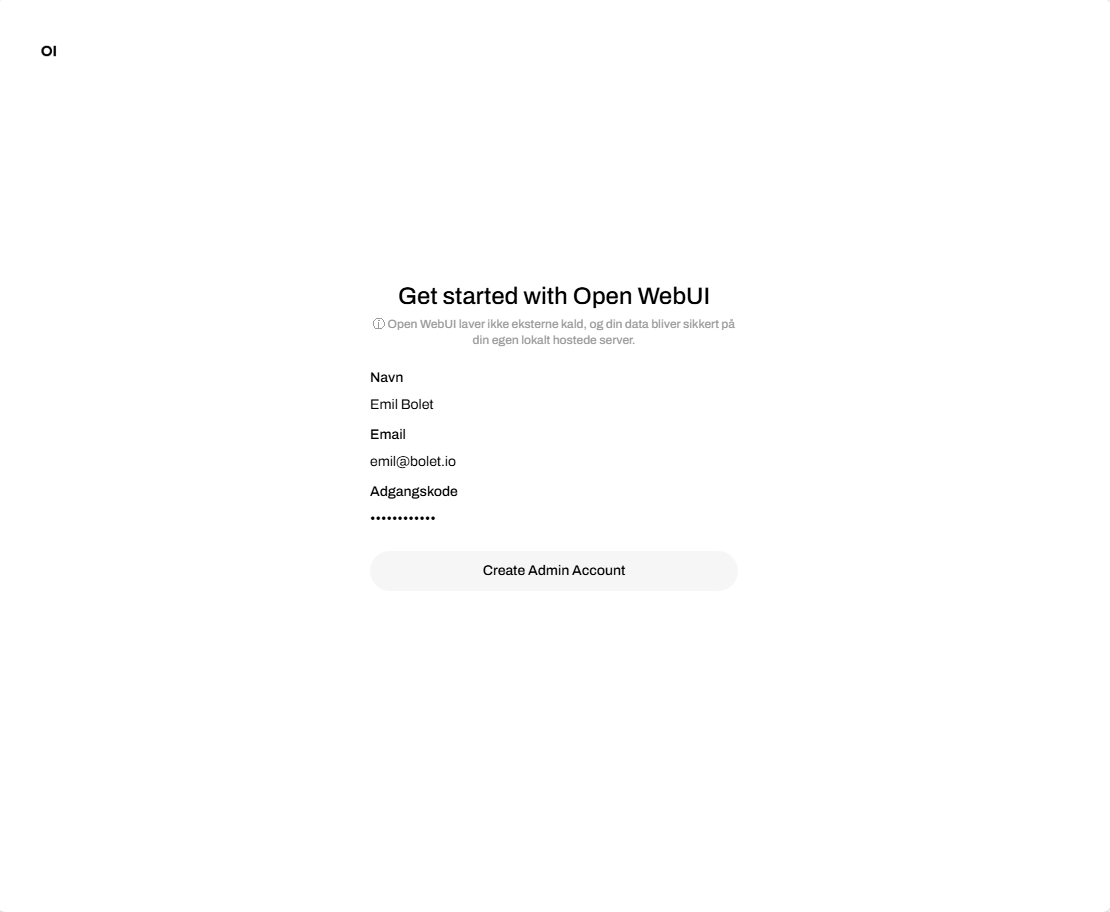

Once deployment is complete:

- Go to your Azure Container App

- Copy the Application URL and open it in a browser

- Follow the on-screen setup instructions

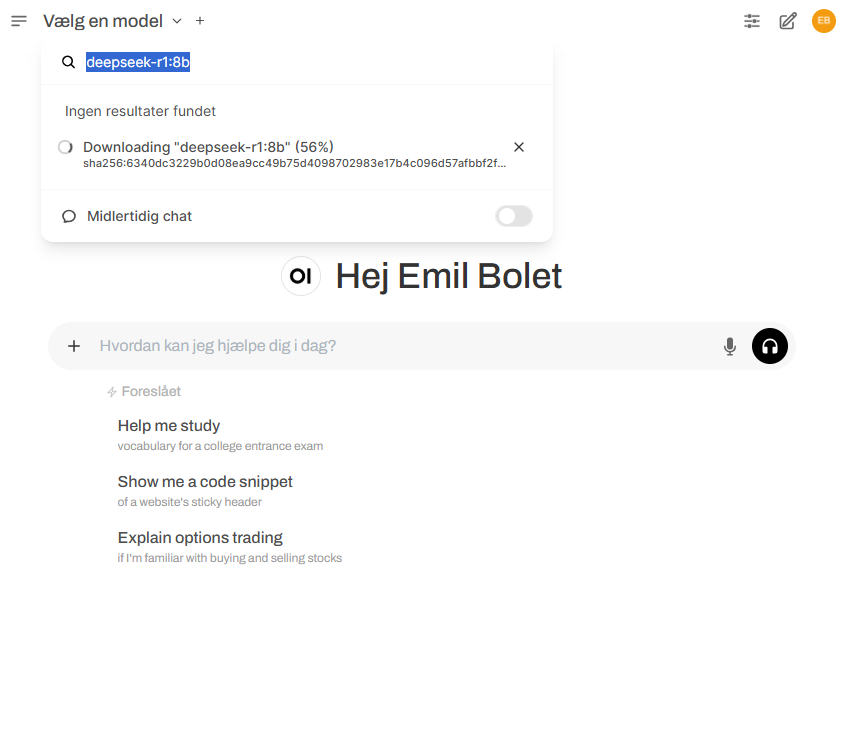

To load DeepSeek-R1, select a model:

- Big Brain Mode (14B Parameters): deepseek-r1:14b

- Lighter Version (8B Parameters): deepseek-r1:8b
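Which tag to pick mostly comes down to memory. A common rule of thumb is that the weights take about parameters × bits per weight ÷ 8 bytes, so a 14B model at 4 bits needs roughly 7 GB before runtime overhead. The sketch below applies that estimate. The 4.5 bits per weight (assumed to approximate a 4-bit quantized build) and the 1.5× headroom for the KV cache and runtime are assumptions, and `ModelPicker` is a hypothetical helper, not part of Ollama or Open WebUI.

```csharp
public static class ModelPicker
{
    // Hypothetical list of Ollama tags and parameter counts (billions), largest first.
    private static readonly (string Tag, double ParamsBillions)[] Candidates =
    {
        ("deepseek-r1:14b", 14.0),
        ("deepseek-r1:8b", 8.0),
    };

    // Rough weight size in GB: parameters (billions) x bits per weight / 8 bits per byte.
    public static double EstimateWeightsGb(double paramsBillions, double bitsPerWeight) =>
        paramsBillions * bitsPerWeight / 8.0;

    // Returns the largest tag whose estimated weights, multiplied by a safety headroom
    // for the KV cache and runtime overhead, fit into the available memory; null if none fit.
    public static string? Pick(double availableGb, double bitsPerWeight = 4.5, double headroom = 1.5)
    {
        foreach (var (tag, paramsBillions) in Candidates)
        {
            if (EstimateWeightsGb(paramsBillions, bitsPerWeight) * headroom <= availableGb)
            {
                return tag;
            }
        }
        return null;
    }
}
```

On a T4 GPU, which has 16 GB of memory, `ModelPicker.Pick(16)` returns `deepseek-r1:14b`; with about 8 GB available it falls back to `deepseek-r1:8b`, and with 4 GB it returns `null`. Treat these as rough estimates, not guarantees.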

Click "Pull", wait for the model to download, and start chatting with AI.
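Once a model is pulled, you can also call it from code instead of the chat UI. Open WebUI documents an OpenAI-compatible endpoint at `/api/chat/completions`, authenticated with a bearer API key that you generate in Open WebUI's account settings. Treat the path, the auth scheme, and whether API keys are enabled on your instance as assumptions to verify for your Open WebUI version. `OpenWebUiClient`, `BuildChatPayload`, and `AskAsync` are hypothetical names used for illustration.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

public static class OpenWebUiClient
{
    // Builds an OpenAI-style chat payload: {"model": ..., "messages": [{"role": "user", "content": ...}]}.
    public static string BuildChatPayload(string model, string prompt) =>
        JsonSerializer.Serialize(new
        {
            model,
            messages = new[] { new { role = "user", content = prompt } }
        });

    // Sends one prompt to the assumed /api/chat/completions endpoint and returns the reply text.
    public static async Task<string> AskAsync(HttpClient http, Uri appUrl, string apiKey, string model, string prompt)
    {
        using var request = new HttpRequestMessage(HttpMethod.Post, new Uri(appUrl, "/api/chat/completions"));
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
        request.Content = new StringContent(BuildChatPayload(model, prompt), Encoding.UTF8, "application/json");

        using var response = await http.SendAsync(request);
        response.EnsureSuccessStatusCode();

        // OpenAI-compatible responses put the reply in choices[0].message.content.
        using var doc = JsonDocument.Parse(await response.Content.ReadAsStringAsync());
        return doc.RootElement.GetProperty("choices")[0]
            .GetProperty("message").GetProperty("content").GetString() ?? string.Empty;
    }
}
```

Pass your Container App's Application URL as `appUrl` and the tag you pulled (for example `deepseek-r1:8b`) as `model`.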


💡 Want other AI models? Browse the Ollama Model Library.

Optimizing Performance

If your app takes a while to start, try these:

- Host the container image in Azure Container Registry (ACR) to reduce cold start times.

- Enable artifact streaming in ACR to speed up image pulls and container startup.
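Because the app scales down when idle, the first request after a quiet period can hit a cold start. A client can poll until the app responds before sending a prompt. The sketch below is a hypothetical helper (`WarmUp`, `WaitUntilReadyAsync`, and `HttpProbe` are illustrative names). It assumes Open WebUI answers `GET /health` with a 2xx status once it is ready, which you should confirm for your image version.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public static class WarmUp
{
    // Calls probe() until it reports ready or maxAttempts is used up, waiting `delay` between attempts.
    // Network errors and timeouts during a cold start count as "not ready yet".
    public static async Task<bool> WaitUntilReadyAsync(Func<Task<bool>> probe, TimeSpan delay, int maxAttempts)
    {
        for (var attempt = 1; attempt <= maxAttempts; attempt++)
        {
            try
            {
                if (await probe()) return true;
            }
            catch (Exception ex) when (ex is HttpRequestException || ex is TaskCanceledException)
            {
                // Swallow and retry: the replica may still be starting.
            }
            if (attempt < maxAttempts) await Task.Delay(delay);
        }
        return false;
    }

    // Builds a probe that treats any 2xx response from healthUrl as "ready".
    public static Func<Task<bool>> HttpProbe(HttpClient http, Uri healthUrl) => async () =>
    {
        using var response = await http.GetAsync(healthUrl);
        return response.IsSuccessStatusCode;
    };
}
```

For example, `await WarmUp.WaitUntilReadyAsync(WarmUp.HttpProbe(http, new Uri(appUrl, "/health")), TimeSpan.FromSeconds(10), 30)` keeps trying for roughly five minutes before giving up.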

Final Thoughts

Both Azure AI Foundry and Azure Container Apps provide excellent options for running DeepSeek-R1, depending on your needs. If you prefer a managed service with API-based integration, Azure AI Foundry is the best choice. However, if you want full control and containerized deployment, Azure Container Apps is the way to go.

For a deeper dive into Azure AI Foundry, check out the official Microsoft Tech Blog.

For an in-depth technical breakdown, check out the official Microsoft Tech Community Blog.

Whichever approach you choose, AI deployment on Azure has never been easier. Now go build something amazing!